It’s 11 PM. Your server dashboards are a sea of reassuring green. CPU: 68%. Memory: healthy. Network: nominal. Yet, on the operations lead’s phone, the real-time revenue graph has just developed a mysterious, jagged dip. In the last ten minutes, approximately £15,000 worth of confirmed orders have silently vanished from the business ledger.

The 2 AM war room scramble begins. The payment gateway logs show successful transactions. The order database reveals no deadlock errors. The inventory system reports clean commits. Each isolated system claims perfect health, but the orders are indisputably gone. After hours of frantic searching, the culprit is found: an 8-millisecond timeout in a third-party SMS service, a "normal failure" buried and ignored in every individual system's logs.

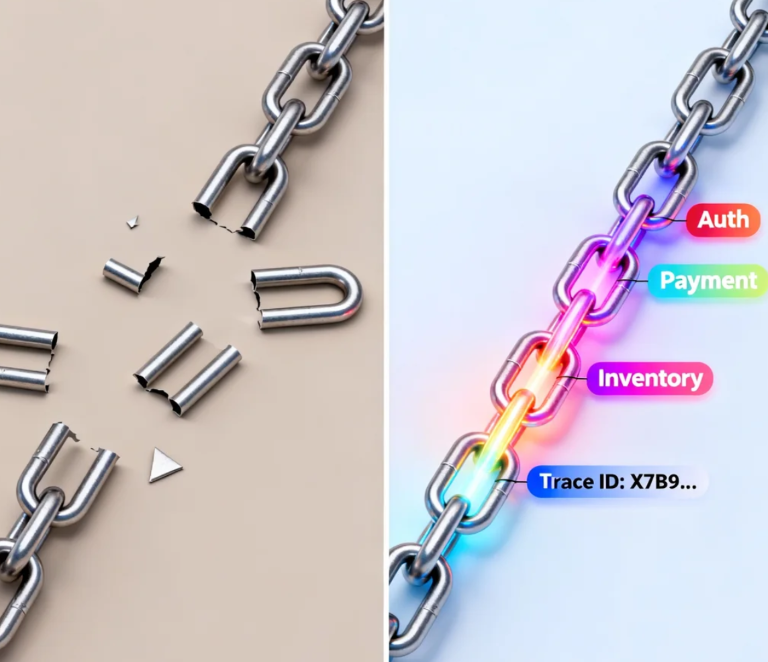

This is the central paradox of modern distributed systems: as each component becomes more monitored and robust, the critical business pathways woven from them become more fragile and opaque. We've mastered monitoring the machinery but lost sight of the mission.

The Twilight of Monitoring and the Dawn of Observability

What most teams call "monitoring" is essentially a checklist of known health metrics: CPU, memory, disk I/O, error counts. Like your car's dashboard, these tell you the RPM and fuel level but are useless for explaining why your speed suddenly dropped on the motorway while every gauge read normal.

Observability is a different paradigm. It accepts that in complex systems, failure modes are too numerous and unpredictable to enumerate in advance. Its core power isn't checking predetermined boxes; it's the ability to ask new, unforeseen questions when the system behaves oddly, and get clear answers.

A single e-commerce order's journey can span 15 or more distinct microservices (authentication, product details, cart, promotions, inventory, payment gateway, order creation, logistics, notifications, and so on), each with its own data store, cache, and external dependencies.

Traditional monitoring builds tall walls around each vertical. Observability constructs transparent corridors between them all.

The Golden Signals of Your Business Workflows

Research from the Cloud Native Computing Foundation indicates that in microservices architectures, over 70% of production failures originate not from a single service crashing, but from low-probability, aberrant interactions between multiple healthy services. These "perfect storm" scenarios are invisible to any single service's dashboard but lethal to the business pathway.

This demands we move beyond infrastructure metrics to define the "Golden Signals" of our business pathways:

Pathway Success Rate: Not the percentage of HTTP 200 responses, but the success rate of the complete business transaction—from "user clicks buy" to "order confirmation received." The shocking truth is that systems boasting 99.9% uptime often have a pathway success rate of just 95-97%, meaning 3-5 out of every 100 orders mysteriously dissipate.
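A minimal Python sketch of the difference, assuming each checkout attempt is recorded with the HTTP status of every call it made plus a flag for whether the customer actually received an order confirmation. The `CheckoutAttempt` record and its fields are hypothetical, and the data is illustrative only.

```python
from dataclasses import dataclass

@dataclass
class CheckoutAttempt:
    # Hypothetical record: one entry per "user clicks buy" event.
    http_statuses: list[int]      # status codes of every service call in the attempt
    confirmation_received: bool   # did the customer actually get an order confirmation?

def http_success_rate(attempts: list[CheckoutAttempt]) -> float:
    """Share of individual calls that returned HTTP 200 (the infrastructure view)."""
    statuses = [s for a in attempts for s in a.http_statuses]
    return sum(s == 200 for s in statuses) / len(statuses)

def pathway_success_rate(attempts: list[CheckoutAttempt]) -> float:
    """Share of whole purchases that ended in a confirmation (the business view)."""
    return sum(a.confirmation_received for a in attempts) / len(attempts)

# Illustrative data: every call returns 200, yet one purchase in four never completes.
attempts = [
    CheckoutAttempt([200, 200, 200], True),
    CheckoutAttempt([200, 200, 200], True),
    CheckoutAttempt([200, 200, 200], True),
    CheckoutAttempt([200, 200, 200], False),  # e.g. silently rolled back after payment
]
print(f"HTTP success:    {http_success_rate(attempts):.0%}")    # 100%
print(f"Pathway success: {pathway_success_rate(attempts):.0%}")  # 75%
```

An HTTP-level dashboard shows a perfect score here; only the pathway metric sees the lost order.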

Segment Latency Heatmaps: Decompose the purchase flow into 5-7 key stages (page load, cart update, payment processing, etc.) and measure the latency distribution of each. You'll often find that the payment stage's P99 latency (the value exceeded by only the slowest 1% of requests) is 50x its average, directly causing timeouts and data loss.
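The per-stage percentiles that such a heatmap is built from can be sketched in a few lines of Python. This assumes latency samples arrive as hypothetical `(stage, latency_ms)` pairs and uses the nearest-rank definition of a percentile; real tracing backends and metrics systems may interpolate differently.

```python
import math
from collections import defaultdict
from statistics import mean

def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def stage_latency_summary(samples: list[tuple[str, float]]) -> dict[str, dict[str, float]]:
    """Group (stage, latency_ms) samples by stage and summarise each distribution."""
    by_stage: dict[str, list[float]] = defaultdict(list)
    for stage, latency_ms in samples:
        by_stage[stage].append(latency_ms)
    return {
        stage: {"mean": mean(vals), "p50": percentile(vals, 50), "p99": percentile(vals, 99)}
        for stage, vals in by_stage.items()
    }

# Illustrative data: 2% of payment calls are very slow; the cart stage is uniform.
samples = [("payment", 20.0)] * 98 + [("payment", 2000.0)] * 2 + [("cart", 15.0)] * 100
summary = stage_latency_summary(samples)
for stage, stats in summary.items():
    print(stage, {name: round(value, 1) for name, value in stats.items()})
```

In this toy data the payment stage averages about 60 ms, yet its P99 is 2,000 ms: a mean-only dashboard would never show the tail that triggers timeouts.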

Business Exception Correlation: Systems generate hundreds of technical error codes, but what matters are the dozen or so business failure scenarios: inventory conflicts, invalid promo codes, unsupported shipping zones. Observability uncovers hidden patterns like, "Orders from users in Berlin paying with Method X fail 8% of the time due to a silent currency rounding error in a legacy service."
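One simple way to surface patterns like the Berlin example is to slice failure rates by combinations of business attributes and flag segments that sit well above the overall rate. The sketch below assumes orders are available as dictionaries with hypothetical `city`, `payment_method`, and `succeeded` fields; the 2x threshold is an arbitrary illustrative choice.

```python
from collections import Counter

def failure_rates_by_segment(orders: list[dict], keys: tuple[str, ...]) -> dict[tuple, float]:
    """Failure rate for each combination of the given attributes."""
    totals: Counter = Counter()
    failures: Counter = Counter()
    for order in orders:
        segment = tuple(order[k] for k in keys)
        totals[segment] += 1
        if not order["succeeded"]:
            failures[segment] += 1
    return {segment: failures[segment] / totals[segment] for segment in totals}

# Illustrative data: 100 orders per segment, 8 failures hidden in one of them.
orders = (
    [{"city": "Berlin", "payment_method": "X", "succeeded": i >= 8} for i in range(100)]
    + [{"city": "Berlin", "payment_method": "Card", "succeeded": True} for _ in range(100)]
    + [{"city": "Paris", "payment_method": "X", "succeeded": True} for _ in range(100)]
)

rates = failure_rates_by_segment(orders, ("city", "payment_method"))
overall = sum(not o["succeeded"] for o in orders) / len(orders)

# Flag segments failing at more than twice the overall rate (threshold is arbitrary).
suspicious = {seg: rate for seg, rate in rates.items() if rate > 2 * overall}
print(f"overall failure rate: {overall:.1%}")  # 2.7%
print(suspicious)                              # {('Berlin', 'X'): 0.08}
```

Averaged over all orders the failure rate is under 3%, which would pass most global alerts; broken down by segment, one combination fails at 8%.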

Distributed Tracing: The X-Ray for Your System

The technical cornerstone of observability is distributed tracing. It works by stamping each business request with a globally unique Trace ID as it enters your system. This ID propagates through every service call, like a patient's hospital wristband ensuring all tests and notes are attached to a single record.
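The wristband idea maps onto the W3C Trace Context standard, whose `traceparent` header carries a version, a 128-bit trace ID, the ID of the calling span, and trace flags. The stdlib-only Python sketch below shows the propagation rule (keep the trace ID, mint a new span ID per hop) across three hypothetical services; in practice an instrumentation library such as OpenTelemetry handles this propagation for you.

```python
import re
import secrets

# W3C Trace Context traceparent: version-traceid-parentid-flags (lowercase hex).
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")

def new_traceparent() -> str:
    """At the system's edge: mint a new 128-bit trace ID and a first span ID."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"

def child_traceparent(incoming: str) -> str:
    """Before each downstream call: keep the trace ID, mint a new span ID."""
    match = TRACEPARENT.match(incoming)
    if match is None:
        return new_traceparent()  # missing or malformed header: start a fresh trace
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"

def trace_id_of(traceparent: str) -> str:
    return traceparent.split("-")[1]

# Three hypothetical services, each recording which trace it handled.
seen: list[tuple[str, str]] = []

def payment_service(headers: dict) -> None:
    seen.append(("payment", trace_id_of(headers["traceparent"])))

def order_service(headers: dict) -> None:
    seen.append(("order", trace_id_of(headers["traceparent"])))
    payment_service({"traceparent": child_traceparent(headers["traceparent"])})

def gateway() -> None:
    header = new_traceparent()
    seen.append(("gateway", trace_id_of(header)))
    order_service({"traceparent": child_traceparent(header)})

gateway()
for service, trace_id in seen:
    print(f"{service:<8} {trace_id}")  # one trace ID shared by every hop
```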

Visualising all the service calls for a single purchase on a timeline produces a trace view, often rendered as a flame graph or waterfall chart. A healthy transaction looks like orderly columns; a failing one looks like a collapsed building: one service call stretches abnormally, or unexpected branches appear.

By comparing the flame graphs of successful and vanished orders, the team from the opening scenario found the £15,000 bug. After payment, the order creation step made three parallel calls: update loyalty points, send SMS, and generate invoice. Because of a transient provider issue, the SMS call timed out after 8ms in 2 out of every 10,000 calls.

The overall process timeout was 100ms, so an 8ms failure never tripped a timeout alert and was dismissed as "normal". But it triggered a forgotten piece of legacy logic: any failure to send the SMS silently rolled back the entire order creation transaction. The payment succeeded, but the order was erased.
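Comparing groups of traces is straightforward to approximate in code once spans are exported. The sketch below assumes each trace is a list of spans with a name, a duration, and an error flag; the span names mirror the story but are hypothetical. It reports spans that error far more often in vanished orders than in successful ones, plus spans (the "unexpected branches") that appear only in the failing group.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Span:
    name: str
    duration_ms: float
    error: bool = False

def error_rate_by_span(traces: list[list[Span]]) -> dict[str, float]:
    """Fraction of occurrences of each span name that ended in error."""
    seen: Counter = Counter()
    errors: Counter = Counter()
    for trace in traces:
        for span in trace:
            seen[span.name] += 1
            if span.error:
                errors[span.name] += 1
    return {name: errors[name] / seen[name] for name in seen}

def compare_groups(ok_traces, failed_traces, min_gap: float = 0.5):
    """Return (spans erroring much more in failures, spans seen only in failures)."""
    ok_rates = error_rate_by_span(ok_traces)
    failed_rates = error_rate_by_span(failed_traces)
    erroring = sorted(
        name for name, rate in failed_rates.items()
        if rate - ok_rates.get(name, 0.0) >= min_gap
    )
    only_in_failed = sorted(set(failed_rates) - set(ok_rates))
    return erroring, only_in_failed

def checkout_trace(sms_failed: bool) -> list[Span]:
    """Hypothetical trace: payment, order creation, then three parallel calls."""
    spans = [
        Span("payment", 40.0),
        Span("create_order", 12.0),
        Span("update_loyalty", 5.0),
        Span("send_sms", 8.0, error=sms_failed),
        Span("generate_invoice", 6.0),
    ]
    if sms_failed:
        spans.append(Span("order_tx_rollback", 2.0))  # the legacy rollback branch
    return spans

ok = [checkout_trace(sms_failed=False) for _ in range(50)]
failed = [checkout_trace(sms_failed=True) for _ in range(5)]
print(compare_groups(ok, failed))  # (['send_sms'], ['order_tx_rollback'])
```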

The Three-Layer Observability Architecture

Implementing true business observability is a three-layer endeavour, requiring more organisational shift than technical tooling.

Layer 1: Instrumentation & Context Enrichment

Instrument key services with lightweight tracing libraries. Crucially, enrich these traces with business context: user tier, cart value, product category, location. This binds technical data directly to business impact.
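A stdlib-only sketch of context enrichment, assuming a hypothetical minimal `Span` type and `start_span` helper; OpenTelemetry's Python API expresses the same idea through `span.set_attribute(key, value)`. The attribute names are illustrative rather than a standard convention. The final query shows why this matters: traces can be filtered by business criteria, not just by service.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field

@dataclass
class Span:
    """Hypothetical minimal span; real SDKs (e.g. OpenTelemetry) provide richer ones."""
    name: str
    attributes: dict = field(default_factory=dict)
    duration_ms: float = 0.0

    def set_attribute(self, key: str, value) -> None:
        self.attributes[key] = value

FINISHED: list = []  # stand-in for an exporter that ships spans to a backend

@contextmanager
def start_span(name: str):
    span = Span(name)
    start = time.perf_counter()
    try:
        yield span
    finally:
        span.duration_ms = (time.perf_counter() - start) * 1000
        FINISHED.append(span)

def checkout(user: dict, cart: dict) -> None:
    with start_span("checkout") as span:
        # Business context travels with the technical timing data.
        span.set_attribute("user.tier", user["tier"])
        span.set_attribute("user.region", user["region"])
        span.set_attribute("cart.value_gbp", cart["value"])
        span.set_attribute("cart.category", cart["category"])
        # ... payment, inventory and order-creation calls would go here

checkout({"tier": "premium", "region": "Berlin"}, {"value": 249.0, "category": "electronics"})
checkout({"tier": "standard", "region": "Leeds"}, {"value": 18.5, "category": "books"})

# Business question: which checkouts involved premium users with carts over £200?
premium_high_value = [
    s for s in FINISHED
    if s.attributes.get("user.tier") == "premium" and s.attributes.get("cart.value_gbp", 0) > 200
]
print([s.attributes for s in premium_high_value])
```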

Layer 2: Intelligent Analysis & Pattern Discovery

Use algorithms to analyse trace data for anomalies. For example, the system should raise a flag when the order failure rate for "premium users purchasing high-margin items" spikes from 1% to 8% between 2 and 4 PM, without waiting for an overall threshold to be breached.
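A deliberately simple stand-in for such analysis is to compare each segment's current failure rate with its own baseline. The sketch below assumes failure counts are already aggregated per hypothetical segment key; the 3x ratio and `min_attempts` guard are illustrative choices, and a production system would more likely use a proper statistical test.

```python
from dataclasses import dataclass

@dataclass
class Window:
    attempts: int
    failures: int

    @property
    def rate(self) -> float:
        return self.failures / self.attempts if self.attempts else 0.0

def flag_segments(baseline: dict[str, Window], current: dict[str, Window],
                  ratio: float = 3.0, min_attempts: int = 50) -> list[str]:
    """Flag segments whose failure rate jumped relative to their own baseline."""
    flagged = []
    for segment, now in current.items():
        before = baseline.get(segment)
        if before is None or now.attempts < min_attempts:
            continue  # too little data to judge this segment
        floor = max(before.rate, 0.001)  # avoid dividing by a zero baseline
        if now.rate / floor >= ratio:
            flagged.append(segment)
    return flagged

def overall(windows: dict[str, Window]) -> float:
    return sum(w.failures for w in windows.values()) / sum(w.attempts for w in windows.values())

# Illustrative numbers: one segment spikes from 1% to 8% between 2 and 4 PM.
baseline = {"premium/high-margin": Window(1000, 10), "standard/all": Window(20000, 400)}
current = {"premium/high-margin": Window(100, 8), "standard/all": Window(2000, 40)}

print(f"overall: {overall(baseline):.1%} -> {overall(current):.1%}")  # overall: 2.0% -> 2.3%
print(flag_segments(baseline, current))  # ['premium/high-margin']
```

The overall rate barely moves, so a global threshold stays quiet, while the per-segment comparison fires.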

Layer 3: Feedback Loops & Architectural Refinement

The ultimate goal isn't faster debugging but fewer failures. Analysing failure patterns drives systemic improvements. The fix for the SMS bug wasn't a longer timeout; it was decoupling critical business logic from non-critical notifications. Order creation must not depend on SMS success.
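A minimal sketch of the fix using Python's built-in `sqlite3`. The source doesn't say exactly how the team decoupled the two; this shows one common approach, the transactional outbox pattern, in which the order and a pending-notification record commit together and the SMS is attempted afterwards. `send_sms` and the table layouts are hypothetical stand-ins.

```python
import sqlite3

class SmsTimeout(Exception):
    """Stand-in for the transient provider timeout."""

def send_sms(order_id: int, fail: bool) -> None:
    # Hypothetical SMS client; `fail` simulates the rare timeout.
    if fail:
        raise SmsTimeout(f"SMS for order {order_id} timed out")

def setup() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, payment_ref TEXT)")
    db.execute("CREATE TABLE outbox (order_id INTEGER, kind TEXT, sent INTEGER DEFAULT 0)")
    return db

def create_order_coupled(db: sqlite3.Connection, payment_ref: str, sms_fails: bool) -> None:
    """Legacy shape: the SMS call sits inside the order transaction."""
    try:
        with db:  # commits on success, rolls back if the block raises
            cur = db.execute("INSERT INTO orders (payment_ref) VALUES (?)", (payment_ref,))
            send_sms(cur.lastrowid, sms_fails)
    except SmsTimeout:
        pass  # treated as a "normal" failure, but the order insert was rolled back

def create_order_decoupled(db: sqlite3.Connection, payment_ref: str, sms_fails: bool) -> None:
    """Outbox shape: order and notification request commit atomically; SMS comes after."""
    with db:
        cur = db.execute("INSERT INTO orders (payment_ref) VALUES (?)", (payment_ref,))
        order_id = cur.lastrowid
        db.execute("INSERT INTO outbox (order_id, kind) VALUES (?, 'sms')", (order_id,))
    try:
        send_sms(order_id, sms_fails)
        with db:
            db.execute("UPDATE outbox SET sent = 1 WHERE order_id = ?", (order_id,))
    except SmsTimeout:
        pass  # the order survives; the unsent outbox row can be retried by a worker

db_old = setup()
create_order_coupled(db_old, "pay-001", sms_fails=True)
print(db_old.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 0: order vanished

db_new = setup()
create_order_decoupled(db_new, "pay-001", sms_fails=True)
print(db_new.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1: order kept
print(db_new.execute("SELECT sent FROM outbox").fetchone()[0])      # 0: SMS pending retry
```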

The Counterintuitive ROI of Observability

The common objection is cost. Full tracing can add 5-10% overhead, and storing this data requires resources. Consider this: a mid-market e-commerce platform reduced its mean time to resolution for checkout failures from 4.5 hours to 28 minutes within six months of implementing observability.

More crucially, they uncovered three categories of hidden business leaks:

A coupon stacking bug costing an estimated $300,000 annually.

Silent failures in a specific logistics corridor driving 18% of related customer complaints.

A UI anomaly on mobile reducing checkout conversion by 2.3 percentage points.

The value recovered was orders of magnitude greater than the implementation cost. Observability transforms from a cost centre into a profit protection and discovery engine.

Your Four-Step Observability Initiative

If mysterious failures plague your operations, start here:

Define One Critical Business Pathway: Don't boil the ocean. Choose the single most important journey—"new user sign-up and first purchase" or "core product checkout." Map its 3-5 key milestones.

Implement Lightweight Distributed Tracing: Start with open-source tooling, such as OpenTelemetry for instrumentation and Jaeger as the tracing backend. Instrument the entry points of your chosen pathway. Day one doesn't need to be perfect; it needs to make the pathway visible. Ensure the Trace ID flows from the frontend to the final database call.
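One way to carry the Trace ID into that final database call is to append it to each SQL statement as a comment, so slow-query logs can be joined back to traces. This is similar in spirit to the sqlcommenter convention; the sketch below is a stdlib-only illustration using `sqlite3`, with a hypothetical `with_trace_comment` helper and the example `traceparent` value from the W3C Trace Context specification. It validates the header before embedding it, because interpolating unchecked text into SQL is unsafe.

```python
import re
import sqlite3

TRACEPARENT = re.compile(r"^00-[0-9a-f]{32}-[0-9a-f]{16}-[0-9a-f]{2}$")

def with_trace_comment(sql: str, traceparent: str) -> str:
    """Tag a statement with the caller's traceparent (hypothetical helper)."""
    if not TRACEPARENT.match(traceparent):
        return sql  # never embed unvalidated text in SQL
    return f"{sql} /*traceparent='{traceparent}'*/"

# In a real request this header would arrive from the frontend via each service hop.
incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
query = with_trace_comment("INSERT INTO orders (total) VALUES (?)", incoming)
db.execute(query, (59.99,))  # SQLite ignores the comment; engines that log statement text keep it
print(query)
```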

Build a Business-Level Dashboard: Create a single pane showing: 1) a funnel of your pathway's milestone conversion rates, 2) a latency heatmap for each stage, 3) a breakdown of business exception types. Make this the dashboard everyone on the team watches.
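The funnel panel reduces to a small calculation. This sketch assumes each journey is recorded as the set of milestones it reached; the milestone names are hypothetical and the data is illustrative.

```python
# Hypothetical milestones for a "core product checkout" pathway, in order.
MILESTONES = ["cart_viewed", "payment_submitted", "payment_captured", "order_confirmed"]

def funnel(journeys: dict[str, set[str]]) -> list[tuple[str, int, float]]:
    """Per milestone: journeys that reached it, and conversion from the previous step."""
    rows = []
    previous = None
    for milestone in MILESTONES:
        reached = sum(milestone in steps for steps in journeys.values())
        if previous is None:
            conversion = 1.0
        else:
            conversion = reached / previous if previous else 0.0
        rows.append((milestone, reached, conversion))
        previous = reached
    return rows

# Illustrative data: 100 journeys, 60 pay, but only 57 end with a confirmed order.
journeys = {}
for i in range(100):
    steps = {"cart_viewed"}
    if i < 60:
        steps |= {"payment_submitted", "payment_captured"}
    if i < 57:
        steps.add("order_confirmed")
    journeys[f"journey-{i}"] = steps

for milestone, reached, conversion in funnel(journeys):
    print(f"{milestone:<18} {reached:>4} {conversion:7.1%}")
```

The step from `payment_captured` to `order_confirmed` is where "paid but no order" losses like those in the opening story would show up.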

Conduct Weekly Observability Reviews: This is for learning, not blame. Randomly select 5-10 failed requests and examine their full traces as a team. Ask: "What information would have prevented this?" Use the answers to refine your architecture and code.
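Picking the traces at random, rather than choosing the memorable ones, keeps the review honest. A minimal sketch, assuming the week's failed trace IDs have already been exported from your tracing backend; `pick_for_review` is a hypothetical helper, and the seed simply makes the selection reproducible for the meeting notes.

```python
import random
from typing import Optional

def pick_for_review(failed_trace_ids: list[str], k: int = 8,
                    seed: Optional[int] = None) -> list[str]:
    """Randomly choose up to k failed traces, without replacement."""
    rng = random.Random(seed)
    return rng.sample(failed_trace_ids, min(k, len(failed_trace_ids)))

# Hypothetical IDs; in practice these would come from a search in your tracing backend.
failed = [f"trace-{n:04d}" for n in range(200)]
agenda = pick_for_review(failed, k=8, seed=2024)
for trace_id in agenda:
    print(f"{trace_id}: What information would have prevented this?")
```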

At 4 AM in that war room, when the flame graph comparison pinpointed the 8ms timeout, no one cheered. They sat in silence, staring at the statistics showing 127 similar incidents over the past three months. They had been firefighting in a dark maze, never thinking to illuminate it.

The CTO closed the laptop and offered the night's most valuable insight: "From today, our success metric shifts from '99.99% uptime' to 'every successful payment must have a deterministic outcome.'"

Observability doesn't offer flashier dashboards; it offers a new philosophy: in complex systems, you can't prevent every failure, but you can ensure no failure occurs in silence. The next time an order vanishes, your system won't just sit there—it will raise its hand and clearly state the exact location, time, and reason.

Your architecture deserves that clarity. Your business requires that certainty. Start by illuminating one single, critical pathway.