Is Your Site Under “Attack” by AI Crawlers? Leverage a CDN to Shield Your Server and Save Bandwidth

Let me paint you a picture. It’s 3 AM. Your server’s CPU utilization graph, usually a sleepy hill, suddenly becomes a sheer cliff face rocketing from 30% to 99%. Your bandwidth monitor flashes red, showing a massive, unexplained outflow of data. Yet, your analytics dashboard is quiet—no surge in real visitors, no sales spike. This isn't a DDoS attack in the classic sense. It’s something more pervasive and less understood: your site is being systematically harvested by AI crawlers.

I spoke with a developer last month running a niche API service. "My bill for egress bandwidth tripled in a quarter," he said, frustrated. "Over 60% of my 2 million monthly requests were from crawlers I’d never heard of, all training their models on my data. They don't click 'buy.' They just take."

This is the silent tax of the AI era. While we marvel at ChatGPT's poetry or Midjourney's art, an insatiable hunger for training data fuels a global crawl, and your server is on the menu. The old rules of web scraping no longer apply.

The New Data Flood: Why This Isn't Your Grandpa's Googlebot

First, let's discard a comforting myth. This isn't just Googlebot politely indexing your site. Modern AI crawlers are a different breed. They are vast in scale, sophisticated in evasion, and indifferent to your server's limits.

Traditional crawlers from search engines are disciplined. They respect robots.txt, space out requests, and primarily seek links. AI crawlers, particularly those from well-funded labs and startups racing to build the next big model, operate under different imperatives. Their goal is comprehensive data acquisition—every article, product description, forum thread, and code snippet.

They deploy from massive, distributed cloud IP ranges, making simple IP blocking a game of whack-a-mole. They rotate user agents to mimic real browsers and can execute JavaScript to render pages like a human would. Some even solve basic CAPTCHAs. Their behavior is engineered for one thing: extraction efficiency, not politeness.

Why Your Old Defenses Are Obsolete (and What to Do Instead)

So you think you're covered? Let's check the usual playbook:

Rate-limiting by IP? They'll cycle through thousands.

User-agent blocking? Their user-agent strings change daily.

Simple CAPTCHAs? Often a solvable speed bump.

The fundamental mismatch is this: you're trying to distinguish a "bad bot" from a "good human" based on simple request signatures. AI crawlers are designed explicitly to blur that line. Your application's logic—meant for serving users—is now wasting precious cycles on an unwinnable identification war.

This leads to the core strategic mistake: trying to fight this battle at your origin server. Every request you process, whether it's serving a page to a customer or a futile 403 error to a crawler, consumes CPU, memory, and—most expensively—bandwidth. Your origin becomes the bottleneck, the chokepoint where you pay the price for the entire internet's data hunger.

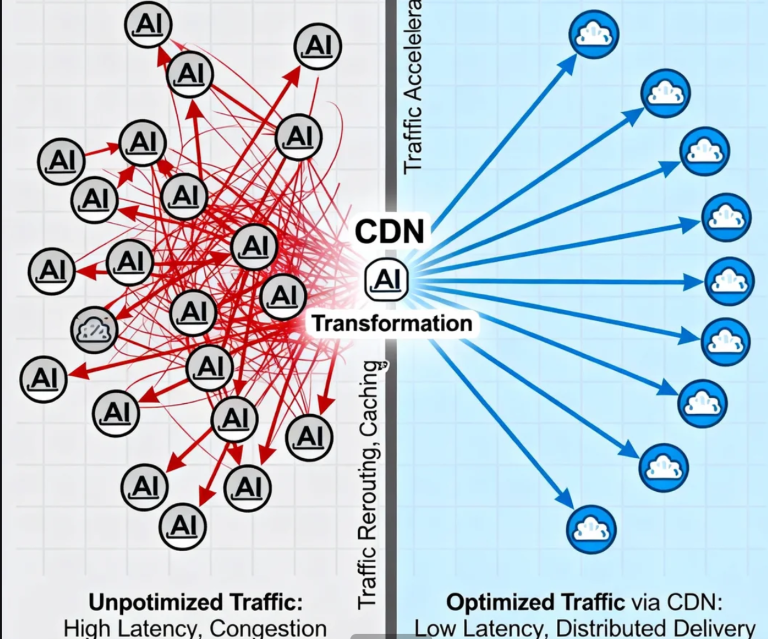

The Intelligent Buffer: How a CDN Becomes Your Strategic Shield

This is where a paradigm shift saves you. Instead of bearing the brunt at your origin, you move the front line forward to a globally distributed Content Delivery Network (CDN). Think of it not just as a cache, but as an intelligent, programmable shield. Here’s how it works in three layered defenses:

1. The Cache Wall: Absorbing the Brunt

The most immediate relief comes from caching. A well-configured CDN will serve static assets (images, CSS, JS) and even cacheable HTML directly from its edge nodes. When an AI crawler requests your "About Us" page for the thousandth time, the CDN serves a cached copy without a single byte of traffic hitting your origin server. On a site where most pages are cacheable, this alone can intercept 70-80% of repetitive, low-value crawl traffic. It’s not a barrier; it’s a sponge.
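What the CDN may cache is largely decided by the Cache-Control headers your origin sends. The sketch below shows one way to choose those headers per path. The function name, the extension list, and the TTL values are illustrative assumptions, not recommendations for any particular site, and the long static TTL assumes your asset filenames are fingerprinted.

```python
from pathlib import PurePosixPath

# Illustrative assumption: which extensions count as static assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".svg", ".woff2"}


def cache_control_for(path: str, is_personalized: bool = False) -> str:
    """Return a Cache-Control header value for a request path (hypothetical policy)."""
    if is_personalized:
        # Per-user responses must never be stored by a shared cache such as a CDN.
        return "private, no-store"
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # One year, immutable: only safe if filenames change when content changes.
        return "public, max-age=31536000, immutable"
    # Cacheable HTML: short browser TTL (max-age), longer edge TTL (s-maxage).
    return "public, max-age=60, s-maxage=3600, stale-while-revalidate=86400"


if __name__ == "__main__":
    for p in ("/static/app.css", "/about-us"):
        print(p, "->", cache_control_for(p))
```

With headers like these, repeated crawler requests for "/about-us" can be answered from the edge for up to an hour between origin fetches, while account pages stay out of the shared cache entirely.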

2. The Rule Engine: Precision Traffic Control

Modern CDNs offer powerful, configurable rule sets (like Cloudflare's WAF custom rules, or AWS WAF attached to CloudFront). This is where you get surgical. You can create logic that identifies likely crawlers not by who they say they are, but by what they do:

Is a single IP requesting hundreds of disparate pages per minute?

Is a session accessing pages without ever loading the main CSS or image files?

Are requests coming in patterns that no human would follow?

You can then choose an action: challenge, slow down, or block. The key is that this decision and computation happen at the edge, milliseconds away from the crawler, not on your server.
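To make the behavioral signals above concrete, here is a minimal sketch of the logic in plain Python. Real CDNs express this in their own rule languages; the class name, the 60-second window, and both thresholds are assumptions chosen only for illustration.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60        # assumed observation window
BLOCK_DISTINCT_PAGES = 100  # assumed: more distinct pages than this per window -> block
CHALLENGE_PAGES = 20        # assumed: this many pages with zero assets -> challenge
ASSET_SUFFIXES = (".css", ".js", ".png", ".jpg", ".svg")


class BehaviorTracker:
    """Tracks recent requests per IP and suggests an action (hypothetical helper)."""

    def __init__(self) -> None:
        self._hits = defaultdict(deque)  # ip -> deque of (timestamp, path)

    def record(self, ip: str, path: str, timestamp: float) -> str:
        hits = self._hits[ip]
        hits.append((timestamp, path))
        # Drop requests that have fallen out of the sliding window.
        while hits and hits[0][0] <= timestamp - WINDOW_SECONDS:
            hits.popleft()
        pages = {p for _, p in hits if not p.endswith(ASSET_SUFFIXES)}
        assets = sum(1 for _, p in hits if p.endswith(ASSET_SUFFIXES))
        if len(pages) > BLOCK_DISTINCT_PAGES:
            return "block"      # hundreds of disparate pages per minute
        if len(pages) >= CHALLENGE_PAGES and assets == 0:
            return "challenge"  # many pages, never loads CSS or images
        return "allow"


if __name__ == "__main__":
    tracker = BehaviorTracker()
    action = "allow"
    for i in range(25):
        action = tracker.record("203.0.113.5", f"/articles/{i}", float(i))
    print(action)
```

A browser that loads a page along with its stylesheet and images stays on the "allow" path, while a client that walks through dozens of pages without fetching a single asset gets challenged.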

3. The AI vs. AI Layer: Behavioral and ML-Powered Protection

This is the cutting edge. Leading CDNs now integrate machine learning models that analyze traffic patterns in real time across their entire network. They can detect anomalies and emerging bot signatures you'd never see on your single server. They identify the "low-and-slow" crawlers that spread requests over weeks. This is defensive AI countering offensive AI, and it's a service you subscribe to, not a system you have to build and maintain.

The Uncomfortable Truth: Most AI Crawlers Aren't "Malicious"

Here's the perspective shift. We must stop thinking of this traffic as an "attack" and start seeing it as unsanctioned resource consumption. Most of these crawlers aren't run by hackers; they're run by data scientists building legitimate models. Their goal isn't to crash your site, but their method is to take what they need with minimal regard for your costs.

This changes the strategy. Your goal isn't total war, but cost-effective resource management. You want to allow reasonable, well-behaved indexing (which still has SEO value) while aggressively filtering out the abusive consumption that drains your wallet. A CDN lets you implement this nuanced policy. You can serve cached content to crawlers, throttle their connection speeds, or route them to a stripped-down version of your site, all while preserving the pristine experience for your paying customers.
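One way to picture this nuanced policy is as a small decision function that maps what you know about a client to a graduated response. This is a sketch under stated assumptions: the `bot_score` input (0.0 for clearly human, 1.0 for clearly automated) stands in for whatever score your CDN exposes, and the thresholds and names are hypothetical.

```python
from enum import Enum


class Action(Enum):
    SERVE_NORMAL = "serve_normal"
    SERVE_CACHED = "serve_cached"
    THROTTLE = "throttle"
    BLOCK = "block"


# Assumed thresholds, for illustration only.
LIKELY_HUMAN_BELOW = 0.3
LIKELY_BOT_FROM = 0.8
AGGRESSIVE_RPM = 60


def choose_action(is_verified_search_bot: bool, bot_score: float,
                  requests_per_minute: int) -> Action:
    """Pick a graduated response instead of a binary allow/deny."""
    if is_verified_search_bot:
        # Well-behaved indexing keeps its SEO value; answer it from cache.
        return Action.SERVE_CACHED
    if bot_score < LIKELY_HUMAN_BELOW:
        return Action.SERVE_NORMAL
    aggressive = requests_per_minute > AGGRESSIVE_RPM
    if bot_score < LIKELY_BOT_FROM:
        # Uncertain traffic: cached content is cheap, throttle only if it is heavy.
        return Action.THROTTLE if aggressive else Action.SERVE_CACHED
    # Very likely automated: block only the most aggressive clients.
    return Action.BLOCK if aggressive else Action.THROTTLE


if __name__ == "__main__":
    print(choose_action(False, 0.95, 120))
```

The point of the design is that blocking is the last resort: most suspicious traffic is either answered cheaply from cache or slowed down, which limits the damage of a false positive.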

The Bottom Line: It's a Financial Decision

Let's talk numbers, because this is ultimately about your bottom line. Cloud egress bandwidth—the cost of data leaving your server—is expensive. A mid-sized site seeing tens of terabytes of unwanted crawl traffic per month can face bills in the hundreds or thousands of dollars.

Implementing a smart CDN strategy flips the economics. Consider this real scenario from a SaaS company:

Problem: Monthly bandwidth bill: ~$900. Analysis showed ~65% was AI/aggressive bot traffic.

Solution: Implemented a CDN with aggressive caching and bot management rules. CDN service cost: $200/month.

Result: Origin bandwidth bill dropped to ~$300. Net monthly saving: $400. Plus, their origin server performance improved by 40%, reducing the need for an imminent upgrade.

The return isn't just defensive; it shows up in both performance and cost. You're trading a variable, unpredictable cost (runaway bandwidth) for a fixed, predictable one (a CDN subscription).
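The arithmetic behind that scenario is simple enough to rerun with your own numbers. The sketch below uses the figures quoted above ($900 before, $300 after, $200 for the CDN); the function names are hypothetical.

```python
def net_monthly_saving(bill_before: float, bill_after: float, cdn_cost: float) -> float:
    """Old bandwidth bill minus the new total (reduced bill plus CDN fee)."""
    return bill_before - (bill_after + cdn_cost)


def break_even_bill_after(bill_before: float, cdn_cost: float) -> float:
    """Highest post-CDN bandwidth bill at which the CDN still pays for itself."""
    return bill_before - cdn_cost


if __name__ == "__main__":
    # Figures from the SaaS scenario above.
    print(net_monthly_saving(900, 300, 200))  # 400
    print(break_even_bill_after(900, 200))    # 700
```

In that scenario the CDN pays for itself as long as the origin bandwidth bill falls below $700 a month; the reported $300 leaves a comfortable margin.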

Your 4-Step Action Plan

1. Diagnose: Don't guess. Use your server logs or a CDN's analytics to audit your traffic. Log analysis tools can help you segment and identify bot traffic. What percentage is "good" vs. "bad"? What's it costing you?

2. Choose Your Shield: Select a CDN provider that offers robust security and bot management features, not just basic caching. Look for customizable rule engines and behavioral analysis capabilities.

3. Deploy Strategically: Start by enforcing caching policies for all static content. Then, implement graduated rules: challenge suspicious requests, rate-limit aggressive IP ranges, and only outright block the most egregious offenders. Monitor closely to avoid false positives.

4. Iterate: This is an ongoing arms race. Review your CDN's security reports regularly. New crawler signatures emerge constantly. Treat your CDN configuration as a living part of your infrastructure.
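For the diagnosis step, a first rough audit can be done with a few lines of Python over your access logs. The sketch below assumes logs in the common "combined" format and only counts crawlers that declare themselves in the user agent; the token list is a small illustrative sample, and because many crawlers disguise their user agent, treat the result as a lower bound.

```python
import re
from collections import Counter

# Assumes the "combined" access log format used by default in many web servers.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) "[^"]*" "(?P<agent>[^"]*)"'
)

# Illustrative sample of self-declared AI crawler tokens, not a complete list.
BOT_TOKENS = ("gptbot", "claudebot", "ccbot", "bytespider", "perplexitybot")


def bytes_by_segment(lines):
    """Sum response bytes per segment: 'declared_ai_bot' vs. 'other'."""
    totals = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        size = 0 if match["bytes"] == "-" else int(match["bytes"])
        agent = match["agent"].lower()
        is_bot = any(token in agent for token in BOT_TOKENS)
        totals["declared_ai_bot" if is_bot else "other"] += size
    return totals


def bot_share(totals) -> float:
    """Fraction of all bytes served to declared AI crawlers."""
    total = sum(totals.values())
    return totals["declared_ai_bot"] / total if total else 0.0


if __name__ == "__main__":
    with open("access.log", encoding="utf-8", errors="replace") as f:  # assumed path
        print(f"{bot_share(bytes_by_segment(f)):.1%} of bytes went to declared AI bots")
```

Multiplying that share by your monthly egress bill gives a first estimate of what the crawl traffic is costing you.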

The internet's landscape has fundamentally changed. Your server is no longer just a destination for customers; it's a potential data mine for the AI revolution. You have every right to protect your resources and control your costs.

Deploying a CDN as an intelligent shield isn't about walling off your garden. It's about installing a smart irrigation system that ensures the water (your server's resources) flows to the plants you care about, rather than evaporating into the air or flooding land you never intended to cultivate.

It transforms an invisible, escalating cost into a manageable, optimized layer of your stack. In the age of AI, the most strategic server investment you can make might just be the one that sits in front of it.